Generating Rappers with StyleGAN2

|

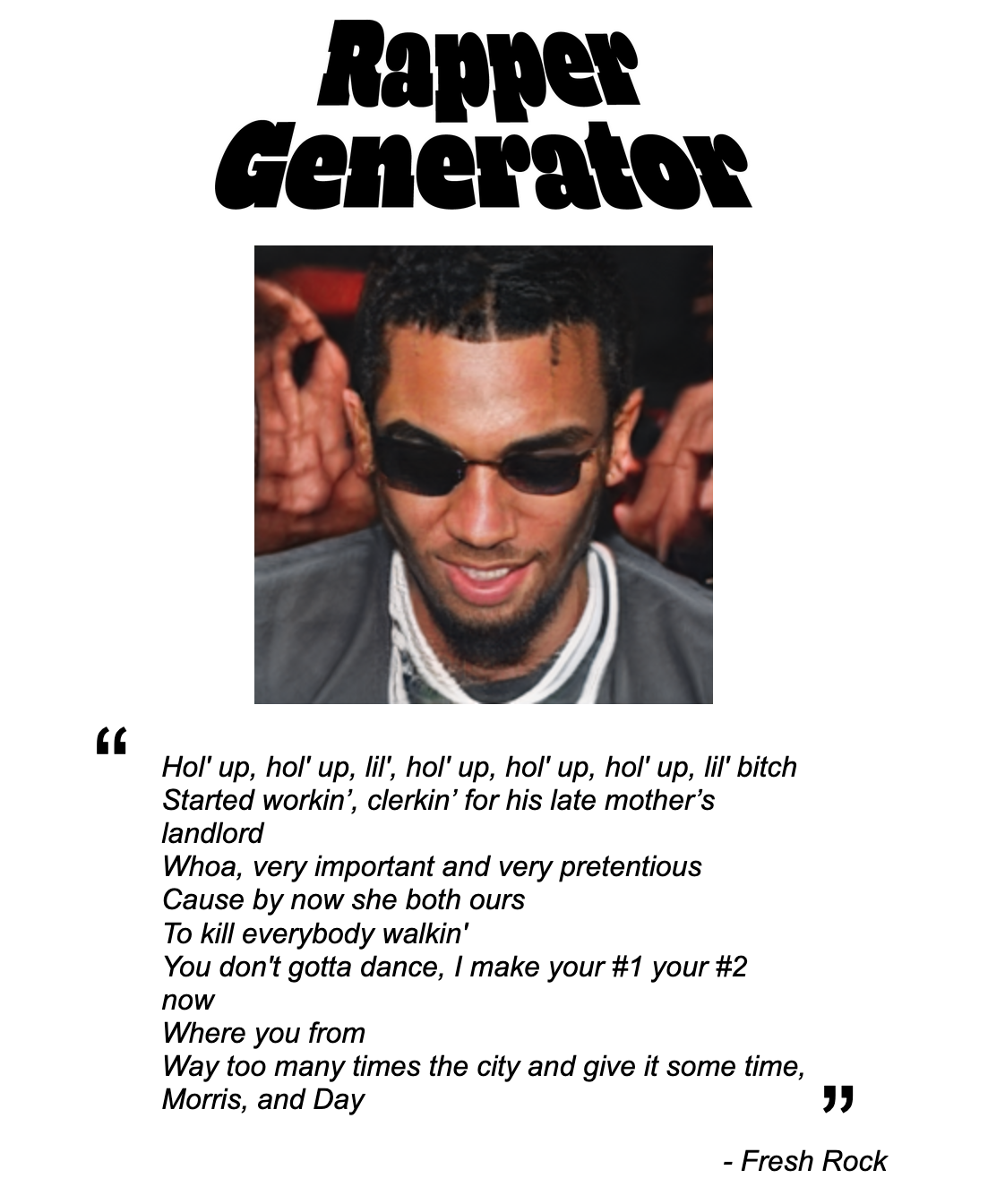

| Rapper Generator website |

Go and check out the Rapper Generator at https://franciscosalg.neocities.org/rappergenerator/ !

This post details how I trained the StyleGAN2 [1] model, which powers the Rapper Generator website.

StyleGAN2 is the state-of-the-art generative adversarial network (GAN) for generating high quality images. It was released in 2020 by NVIDIA and it fixed most of the artifacts in the images generated by the original StyleGAN.

All the training data was put together by scrapping rappers’ Instagram pages for photos of their faces.

Keep reading for more details.

Collecting a Dataset of Rappers Faces

Training a state-of-the-art GAN requires thousands of pictures and many hours of GPU time. FFHQ [2], the face dataset used by NVIDIA to train StyleGAN2, contains 70,000 images.

So instead of training the network from scratch, I finetuned one of the provided StyleGAN2 checkpoints on my own smaller dataset which contained around 5k images.

First I had to put together a list of rappers’ Instagrams, whose images I wanted to download. I browsed around and found Genius keeps a bio for each artist with links to their social media. I just had to scrap this info from rappers pages.

Rap Genius API

Genius (formerly known as Rap Genius) is a website focused on providing annotations and interpretation of song lyrics, mainly focused on hip hop. On top of that, it provides metadata such as the album the song belongs to, associated acts, links to the artist’s social media, etc.

All this info can be acessed through the provided API, which requires registering the app making the requests. However, if you access via https://genius.com/api instead of https://api.genius.com, you don’t need to provide an Acess Token, which simplifies everything.

Each artist info is accessed through the endpoint https://genius.com/api/artists/:id. Not any ID would work as I was only interested in rappers. By looking up the most popular songs in the hiphop charts, I could get a list of IDs of the most popular artists and from there get their Instagram handles.

The API link for this looks like `https://genius.com/api/songs/chart?page=PAGE_NUMBER&per_page=NUM_SONGS_PER_PAGE&time_period=TIME_PERIOD&chart_genre=rap’ replacing TIME_PERIOD with “all_time”, “month”, “week” or “day”.

Parsing the returned data is a piece of cake with the BeautifulSoup module. See the script find_rappers_instagram.py.

Unfortunately the charts API only shows a limited number of songs. In total, I collected 255 Instagram handles this way, which would have to do.

Instagram Downloader

I used Instaloader to download all the pictures from each of the Instagram pages. This tool is great because it ensures enough timing between requests so that your IP is not blocked by Instagram.

Cropping Images

For detecting the faces and cropping the images into 256x256px, I used the align_images.py from https://github.com/nikhiltiru/stylegan2. It uses MTCNN to detect the faces and align them. I made some slight modifications to ensure a minimum resolution of the detected faces (based on eye distance) and only include images where the edges are not visible.

In the end I had over 20k crops of faces. I had to go over all the crops one by one, to remove the images with noise, poor lighting, motion blur and kids faces. The final dataset had around 4.7K images. Here are some samples:

|  |  |  |

Training StyleGAN2

I needed a checkpoint trained on 256x256px images, which was not available for the original StyleGAN2 repository.

I found one from the implementation at https://github.com/rosinality/stylegan2-pytorch, however finetuning from this repository at different learning rates did not produce satisfactory results.

After searching a bit more, I found the a newer repository from NVIDIA, StyleGAN2-ADA[3] https://github.com/NVlabs/stylegan2-ada, aimed at training with less samples and provides checkpoints for multiple resolutions and architectures, including 256x256px.

The models were trained on Google Cloud Platform. Each training experiment took only a couple hours to finetune the models on a n1-highmem-8 preemptible instance with a Tesla P100 GPU.

For the first experiment, I only finetuned the given checkpoint on my own dataset. I achieved an FID of 10.8 and the quality of the produced images had degraded significantly. For my second experiment, I took some cues from Freeze-D [4] and froze the discriminator up to the 3rd layer. This lowered the FID to 9.5 and reduced the artifacts on the generated images. Below you can see some samples obtained with this final model.

Untrucated samples:

|

Samples Psi = 0.5:

|  |  |  |

Samples Psi = 0.7:

|  |  |  |

Looking at the results, psi=0.7 introduces enough variation to produce novel faces without introducing many artifacts. To keep things interesting, all images for the website were generated at this psi.

Lyrics and Rapper Names

As just displaying the generated images would look too plain, I came up with a simple model to write new rap lyrics.

I scraped the top rap songs from Genius and trained a Markov Chain model on them to write 8 bars every time it is run.

The relevant code is in lyrics_scraper.py and markov.py.

Website

I’ve created a website named “Rapper Generator” to display the generated images.

As it would become too costly to have a dedicated server generating samples of faces on the fly, I had to generate them beforehand. I generated around 1500 images (at psi=0.7) and 1000 lyrics, which are displayed randomly everytime the page is refreshed.

Conclusion

You can find the scripts for this experiment on my github.

The quality of photos uploaded to Instagram is all over the place and there is a lot of variation: hands/objects covering face, makeup and acessories, artificial lighting, complex backgrouds, bokeh effect, blur… All of these combined result in a noisy dataset, despite all the effort I put into manually sorting the training pictures. With less than 5k pictures, learning this distribution becomes a hard task for StyleGAN2.

The results are impressive and make for a great laugh: the rappers are wearing accessories such as sunglasses, earrings, chains and hats. There aren’t as many generated faces with tattoos and colored hair as I’d hoped for, so maybe I should’ve only allowed for soundcloud rappers in the training data.

I would also like to address why the final model seems so biased towards DJ Khaled and dark skin males. It just happens that most top charting hip hop artists are male and has a result, most photos downloaded contained male faces, skewing the data distribution. As for DJ Khaled, he just has an unhealthy ammount of Instagram posts (33k) and most of them are selfies.

(Doesn’t it remind you of the heated discussion on the PULSE algorithm on Twitter?)

|

| Wholesome Khaled |

As for the lyrics, it would be interesting to look into deep learning approaches (GPT2, LSTM) in order to achieve semantic coherence and rhymes in the generated text.

References

[1] Tero Karras (NVIDIA), Samuli Laine (NVIDIA), Timo Aila (NVIDIA) “A Style-Based Generator Architecture for Generative Adversarial Networks” https://arxiv.org/abs/1812.04948

[2] Tero Karras (NVIDIA), Samuli Laine (NVIDIA), Timo Aila (NVIDIA) “A Style-Based Generator Architecture for Generative Adversarial Networks” https://arxiv.org/abs/1812.04948

[3] Tero Karras, Miika Aittala, Janne Hellsten, Samuli Laine, Jaakko Lehtinen, Timo Aila “Training Generative Adversarial Networks with Limited Data” https://arxiv.org/abs/2006.06676

[4] Sangwoo Mo and Minsu Cho and Jinwoo Shin “Freeze the Discriminator: a Simple Baseline for Fine-Tuning GANs” https://arxiv.org/abs/2002.10964